Your Evals Don't Matter

You could have the best technically performing AI workflow for a task and see zero impact on your team. This is the sad reality for a lot of folks building internal AI tooling. They assemble impressive systems with amazing engineers, leadership buy-in, and real resourcing. Then it all falls flat in the last mile.

We lived this. Several of our own AI initiatives sat in no man’s land for months. Good evals. And you guessed it, no users. That gap forced us to rethink our north star.

The Bench Max Trap

Here is how most AI teams at startups measure success:

- Build a golden dataset.

- Write a comprehensive prompt.

- Pick the model getting the most love on Twitter.

- Run a script to get a score.

- Rinse and repeat until you hit that beautiful 99.999% (if only).

This feels second nature to us as AI engineers. It’s also a slippery slope. Eval scores tell just a fraction of the story. AI features are still product features. They require user adoption. They require a change in behavior. A perfect score on your benchmark means nothing if nobody opens the d*mn tool!

We switched from model accuracy as our north star to adoption as our proxy for impact.

Ship “Good Enough”

You do not need 95% accuracy to ship. You need to clear the bar of “oh, this is slightly better than what we used to do.” That’s it. That’s your launch threshold.

Engineers want to perfect the model before anyone sees it. We get it. Shipping something half-baked feels wrong. But here’s the trade-off: every week you spend chasing eval improvements in isolation is a week without user feedback. And user feedback is the only thing that moves the needle on real-world accuracy.

The eval-driven roadmap still matters. But it earns its place after you have users generating signal. Accuracy without adoption is a science project. Adoption with mediocre accuracy is a product you can improve.

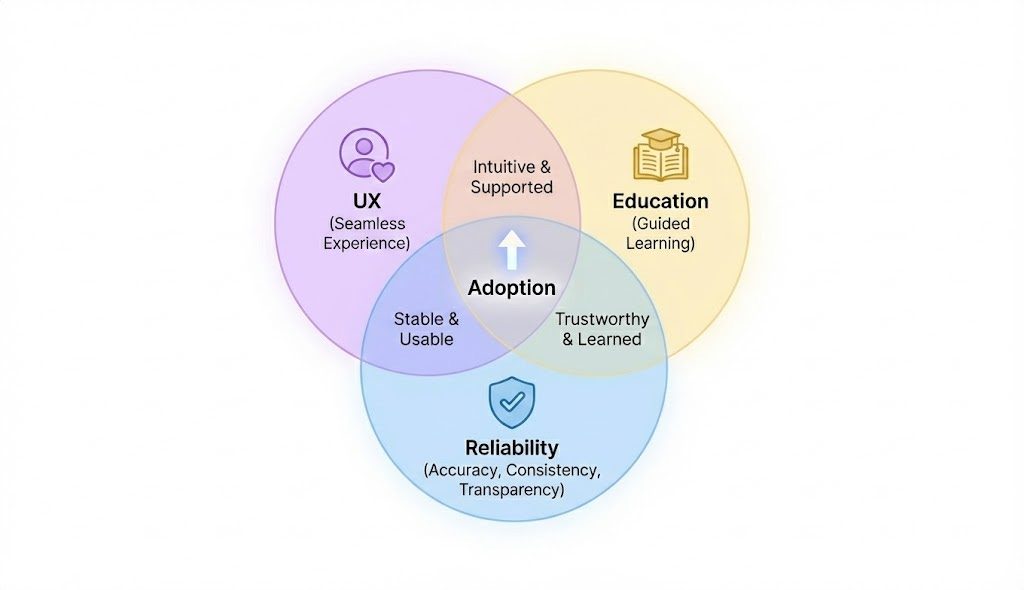

The Adoption Playbook

Here are the 4 stages of AI feature release:

1. No UI, No Problem

Start with a minimal version of your AI feature. This can be as simple as a single prompt to an LLM. It does not need to be perfect. It just needs to clear that “slightly better” bar.

Ship it in production as a background job with an appropriate trigger. Save every result to the database. No UI. No user-facing anything.

This does two things. It stress-tests your pipeline on real data. And it builds the dataset you will need for everything that comes next.
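A minimal sketch of that background job, assuming a SQLite store and a `workflow` callable that wraps your prompt. The table name `ai_results`, its columns, and the function names are hypothetical, not a description of our actual pipeline. The point it illustrates: every run gets a row, including the failures.

```python
import json
import sqlite3
from datetime import datetime, timezone


def init_store(conn):
    # Hypothetical schema: one row per workflow run.
    # `output` stays NULL when the workflow produced nothing.
    conn.execute(
        """CREATE TABLE IF NOT EXISTS ai_results (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            input_id TEXT NOT NULL,
            output TEXT,
            error TEXT,
            created_at TEXT NOT NULL
        )"""
    )
    conn.commit()


def run_and_record(conn, input_id, payload, workflow):
    """Run the workflow on one input and persist the outcome, success or failure."""
    output, error = None, None
    try:
        output = workflow(payload)
    except Exception as exc:  # record the failure instead of losing it
        error = repr(exc)
    conn.execute(
        "INSERT INTO ai_results (input_id, output, error, created_at) "
        "VALUES (?, ?, ?, ?)",
        (
            input_id,
            None if output is None else json.dumps(output),
            error,
            datetime.now(timezone.utc).isoformat(),
        ),
    )
    conn.commit()
    return output
```

Failed runs are stored with a NULL output on purpose. Those rows are what later lets you separate "the workflow ran" from "the workflow produced something."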

2. Dashboards Before UX

You need a way to measure progress before you ever put a button in front of a user. We track three metrics:

Automation rate. Of the times we ran our workflow, what percentage produced an output? This tells us about coverage and reliability.

Accuracy rate. Of those outputs, how many matched ground truth? Scoring logic here may involve fuzzy matching, normalization, and heuristic matching. Get creative.

Adoption rate. Of those outputs, how often did the user accept them? This stays at zero until you ship a UI. That’s fine. The baseline is the point.
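The three rates above can be sketched in a few lines. This is an illustration under assumptions, not our production scoring: the `Run` record, the 0.9 similarity threshold, and the choice to leave unlabeled outputs out of the accuracy denominator are all hypothetical. Fuzzy matching here uses `difflib.SequenceMatcher` from the standard library after a simple lowercase-and-whitespace normalization.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import Optional


@dataclass
class Run:
    output: Optional[str]        # None if the workflow produced nothing
    ground_truth: Optional[str]  # None if no label is available yet
    accepted: Optional[bool]     # None until a UI captures the user's decision


def normalize(text: str) -> str:
    # Lowercase and collapse whitespace before comparing.
    return " ".join(text.lower().split())


def matches(output: str, truth: str, threshold: float = 0.9) -> bool:
    # Assumed threshold; tune it against examples you have checked by hand.
    ratio = SequenceMatcher(None, normalize(output), normalize(truth)).ratio()
    return ratio >= threshold


def rate(numerator: int, denominator: int) -> float:
    return numerator / denominator if denominator else 0.0


def compute_metrics(runs):
    produced = [r for r in runs if r.output is not None]
    # Assumption: outputs without a ground-truth label are not scored.
    labeled = [r for r in produced if r.ground_truth is not None]
    correct = [r for r in labeled if matches(r.output, r.ground_truth)]
    accepted = [r for r in produced if r.accepted]
    return {
        "automation_rate": rate(len(produced), len(runs)),
        "accuracy_rate": rate(len(correct), len(labeled)),
        "adoption_rate": rate(len(accepted), len(produced)),
    }
```

Before any UI exists, `accepted` is `None` on every run, so `adoption_rate` comes out as 0.0. That is the baseline.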

In startups, we build the plane as we figure out how to fly it. Measuring success is no different.

3. Earn the Right to Replace More

Now you build user-facing interfaces. Start minimal. Ship fast.

Your accuracy might not be great yet. That’s okay. Just like any other product feature, you iterate based on feedback. Shipping lets you run usability tests in small groups. You acquire the domain knowledge needed to improve accuracy through these loops. This creates a flywheel: feedback sharpens the model, better results earn more trust, more trust earns adoption.

As accuracy improves, your UX should match. Low accuracy means gentle suggestions in the corner of the screen. High accuracy means the AI output becomes the default. You earn your way from opt-in to opt-out.
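One way to make that progression explicit is a small gate that picks the presentation mode from the measured accuracy rate. This is a sketch only: the `UxMode` names and the 0.9 cutoff are placeholders we made up for illustration, and the right cutoff depends on how costly a wrong default is in your workflow.

```python
from enum import Enum


class UxMode(Enum):
    SUGGESTION = "suggestion"  # opt-in: a gentle hint the user can ignore
    DEFAULT = "default"        # opt-out: the AI output is pre-filled


def ux_mode(accuracy_rate: float, default_at: float = 0.9) -> UxMode:
    """Pick how prominently to surface AI output for a given accuracy rate."""
    if not 0.0 <= accuracy_rate <= 1.0:
        raise ValueError("accuracy_rate must be between 0 and 1")
    # Hypothetical cutoff: at or above it, the AI output becomes the default.
    if accuracy_rate >= default_at:
        return UxMode.DEFAULT
    return UxMode.SUGGESTION
```

For example, `ux_mode(0.5)` returns `UxMode.SUGGESTION` and `ux_mode(0.95)` returns `UxMode.DEFAULT`.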

4. Make Adoption a Shared Mission

This is where most teams stop short. They ship the feature and wait. But users are busy with their own priorities. They are slow to change behavior. Activation does not happen by accident.

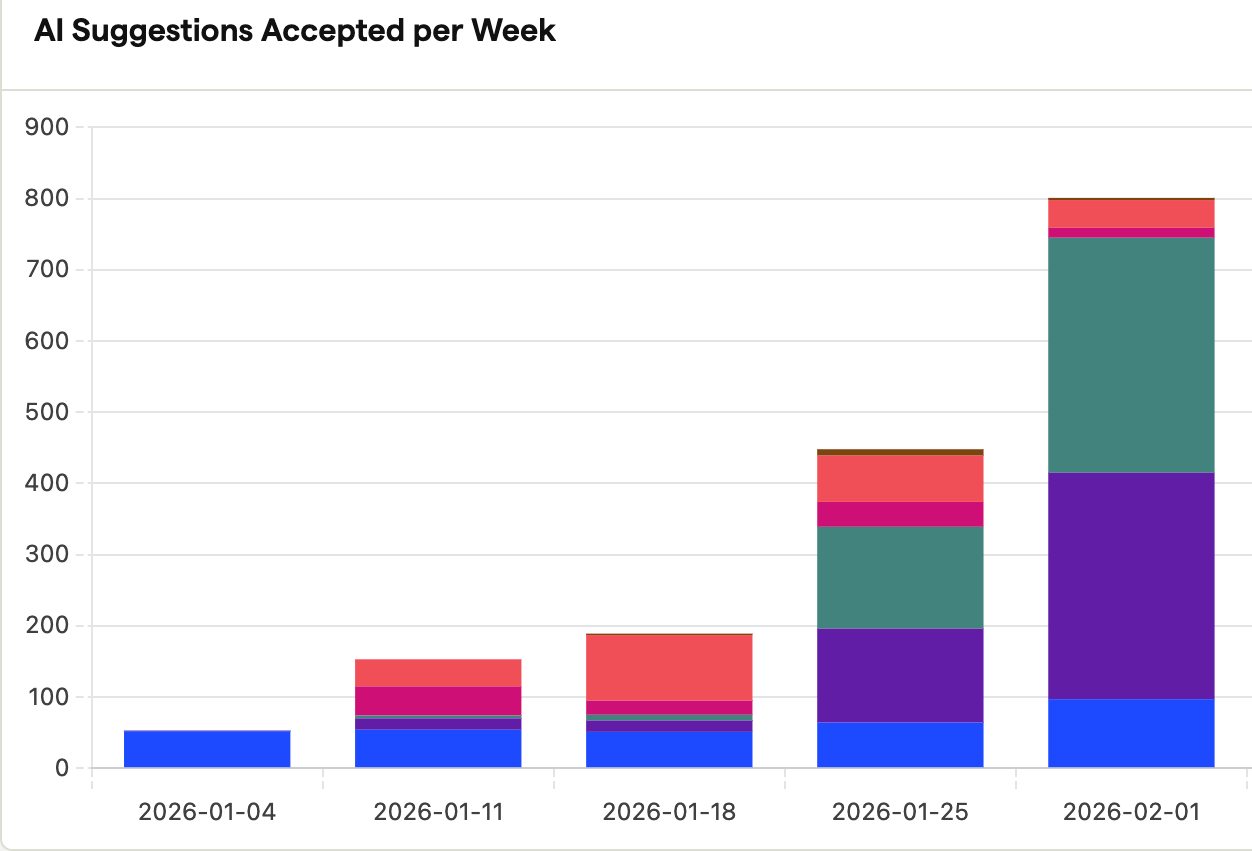

At Shepherd, we set a shared goal across the entire company: 2000% more AI acceptances than last quarter. It’s ambitious enough to feel almost out of reach. The story is still unfolding, but the momentum is real.

Your job is to help every user reach that “aha” moment. Until they experience it firsthand, activating them is an uphill battle. Here is what we are doing to get them there.

Tactics for Activating Users

Building a great AI feature is half the work. The other half is behavior change. Here are the human-factors plays that actually moved our numbers.

Announce relentlessly, but not repetitively. Your users need visibility. A common marketing rule of thumb holds that people need to encounter something roughly seven times before it registers. But those seven touches need to come through multiple channels with non-identical messaging. Slack posts. Email updates. Demo clips. Lunch conversations. All hands. Vary the format. Keep the message.

Hold enablement sessions. Schedule short sessions to walk teams through new AI features. During each session, have every attendee try the feature once with a sample input. Hands-on experience does what screenshots cannot.

Do things that don’t scale. DM every user who hasn’t activated yet. Ask them if they’ve tried the feature. You already know they haven’t. But the psychology of being asked directly makes people feel obliged to give it a shot. Don’t be surprised when someone responds with “what’s that?” That’s your signal, not your failure.

Build education into the UI itself. This can be as simple as a banner. It can be as direct as a popup on first visit. Meet users where they already are: inside the product.

Incentivize the teams who push adoption forward. Recognition, leaderboards, shoutouts in all-hands, performance reviews. Whatever floats your boat. When a team hits an adoption milestone, celebrate it publicly. Adoption is a team sport. Treat it like one.

What Success Feels Like

When adoption becomes a shared metric, something shifts in the company. Everyone watches the same dashboard. We feel excited to talk about AI. We are empowered to share our success. There is asymmetric upside to adapting.

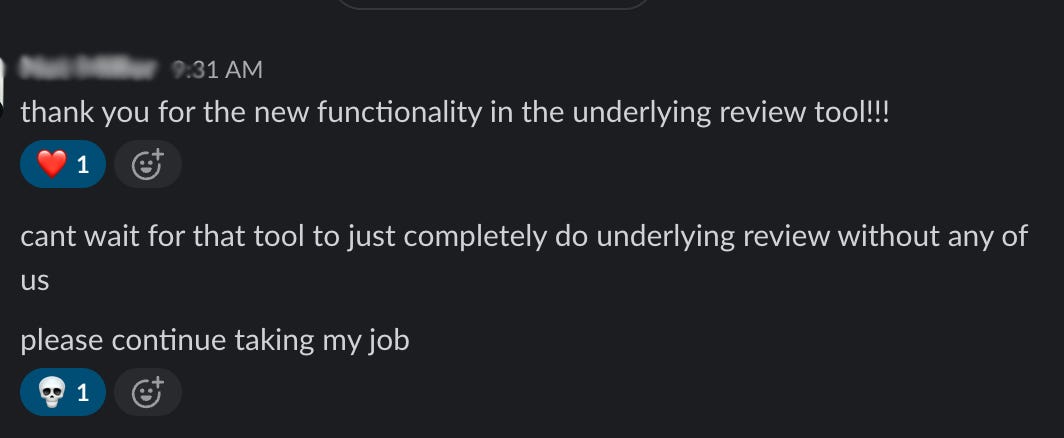

Users start filing feature requests, not just bugs. Someone on the team messages you to say they are grateful. For a feature you thought wasn’t “ready”.

Caution: they may even joke about it taking their job!

This didn’t happen because we cracked some eval threshold. It happened because we treated adoption as the product metric and rallied the entire company around it.

The eval scores matter. But they are a means, not an end. Ship early. Measure adoption. Activate your users. Earn your way into the workflow.